Optimizing Database Performance for Faster Web Applications

In today’s digital world, businesses need a robust online presence to remain competitive. Web applications have become an essential tool for interacting with customers, and they depend on fast, efficient database performance. Whether you’re a developer, IT manager, or business owner, read on to discover how to optimize your database effectively!

What is database optimization for web applications?

Before answering the main question, how to optimize database performance, let’s talk about databases in general. So, what are they?

A database is a storage system that keeps all the information an app needs, e.g. details about users, products, or orders. This is where your web product gets the information it displays on the website, and where it processes transactions and stores user input.

A database must be secure because it contains sensitive information. This includes protecting against SQL injection attacks, storing passwords properly (hashed, never in plain text), and backing the data up regularly in case anything goes wrong.
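
To illustrate the SQL injection point, here is a minimal sketch that uses Python’s built-in sqlite3 module as a stand-in for a production database. The users table and the find_user helper are hypothetical names chosen for this example. The idea is that a parameterized query keeps user input separate from the SQL text.

```python
import sqlite3

# In-memory SQLite database, used purely for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("INSERT INTO users (email) VALUES (?)", ("alice@example.com",))


def find_user(email):
    """Look up a user with a parameterized query (hypothetical helper)."""
    # The ? placeholder makes the driver treat `email` strictly as data,
    # so input such as "' OR '1'='1" cannot change the query's structure.
    cur = conn.execute("SELECT id, email FROM users WHERE email = ?", (email,))
    return cur.fetchall()


legit = find_user("alice@example.com")   # matches the stored row
attack = find_user("' OR '1'='1")        # treated as a literal string, no match
```

Most database drivers offer an equivalent placeholder mechanism, although the placeholder syntax differs between them.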

Database optimization is the process of improving a database’s efficiency and speed so that the app can handle a large number of operations without slowing down or crashing. It also helps reduce the overall load on the server and improve the app’s response time.

What happens if the database is not optimized

Slow page load times occur if queries (i.e. requests sent to the DB to perform an action) take too long to retrieve data, resulting in a delayed response from the server.

Slow query execution leads to long wait times for visitors. This lowers their engagement and eventually results in lost revenue.

Crashes occur if the DB becomes overloaded with such requests or if it runs out of memory.

You may also experience a negative impact on SEO, because search engines prioritize websites that load quickly.

Ein-des-ein: our step-by-step process of database optimization

✓ We evaluate your DB to identify possible inefficiencies. Here, we usually analyze DB size, user queries, system architecture and other parameters.

✓ We create a strategic indexing plan whose main goal is to improve query response times. Our team will help you create, modify, or remove indexes based on query performance analysis for optimal data retrieval.

✓ We review and refine SQL queries to achieve better execution speed. Here, we help you rewrite complex queries, remove unnecessary columns, use efficient operators and joins to reduce processing time.

✓ We restructure the database to minimize data redundancy and preserve data integrity. We help you organize tables and relationships, using normalization techniques up to the desired normal form.

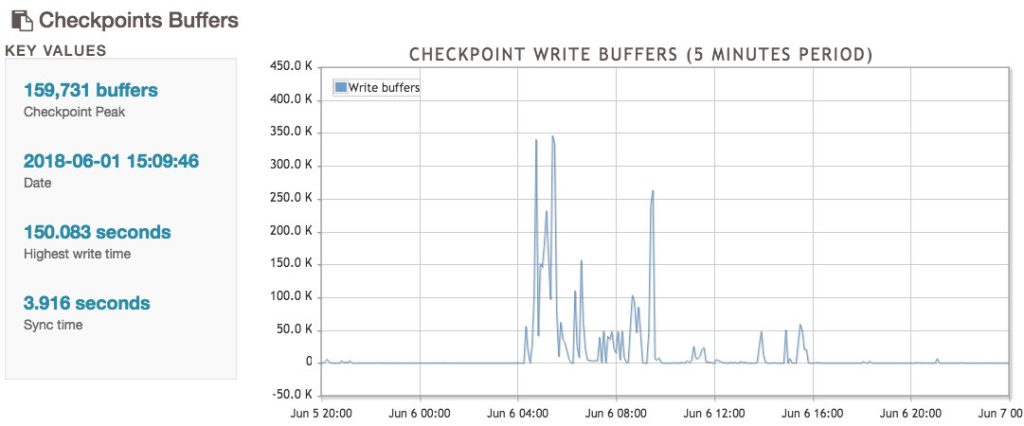

✓ We adjust server resources and configure settings so they meet the database’s demands. This includes optimizing CPU, memory, and storage allocations so the database can run efficiently even under peak loads.

✓ We implement monitoring systems so you can regularly track DB performance metrics without any complications.

Web Database Optimization Techniques

Query optimization

Queries range from simple requests, such as retrieving data from a single table, to complex ones that involve joining multiple tables and filtering information based on specific criteria. The aim of query optimization is to reduce the time it takes to retrieve the requested data.

Analyze queries to identify inefficient ones by using profiling tools, checking execution plans, and monitoring server resources. Try to minimize the use of subqueries and prefer JOINs if possible.
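
As a small illustration of the subquery-versus-JOIN advice, the sketch below runs both forms of the same query against an in-memory SQLite database. The customers and orders tables are hypothetical. Whether the JOIN form is actually faster depends on the database engine and version, so treat this as a pattern to test against your own execution plans rather than a guaranteed win.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, country TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'DE'), (2, 'US'), (3, 'DE');
    INSERT INTO orders VALUES (1, 1, 20.0), (2, 2, 35.0), (3, 3, 15.0), (4, 1, 5.0);
""")

# Subquery form: filter orders through an IN (...) list.
subquery_sql = """
    SELECT o.id, o.total FROM orders o
    WHERE o.customer_id IN (SELECT id FROM customers WHERE country = 'DE')
    ORDER BY o.id
"""

# JOIN form: returns the same rows because customers.id is unique.
join_sql = """
    SELECT o.id, o.total FROM orders o
    JOIN customers c ON c.id = o.customer_id
    WHERE c.country = 'DE'
    ORDER BY o.id
"""

subquery_rows = conn.execute(subquery_sql).fetchall()
join_rows = conn.execute(join_sql).fetchall()
```

Both statements return the same orders here, which is the property to verify before swapping one form for the other in a real application.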

MySQL’s slow query log records the queries that exceed a predefined time limit. This greatly simplifies the task of finding inefficient or time-consuming queries.
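
In MySQL itself, the slow query log is controlled by the slow_query_log and long_query_time system variables. The sketch below is not that feature. It is an application-side analogue in Python, assuming a hypothetical timed_query wrapper and an arbitrary threshold, that shows the same idea: measure each query and record the ones that exceed a limit.

```python
import sqlite3
import time

SLOW_THRESHOLD_SECONDS = 0.5  # assumed limit, similar in spirit to long_query_time
slow_queries = []             # collected (sql, elapsed_seconds) pairs


def timed_query(conn, sql, params=(), threshold=SLOW_THRESHOLD_SECONDS):
    """Run a query and record it if it takes longer than `threshold`."""
    start = time.perf_counter()
    rows = conn.execute(sql, params).fetchall()
    elapsed = time.perf_counter() - start
    if elapsed > threshold:
        slow_queries.append((sql, elapsed))
    return rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (n INTEGER)")
conn.executemany("INSERT INTO t VALUES (?)", [(i,) for i in range(1000)])

# A negative threshold forces this call to be recorded, so the demo is deterministic.
rows = timed_query(conn, "SELECT COUNT(*) FROM t", threshold=-1.0)
# With a generous threshold, the same kind of fast query is not recorded.
timed_query(conn, "SELECT MAX(n) FROM t", threshold=60.0)
```

In practice the recorded entries would go to a log file or a metrics system rather than an in-memory list.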

Use MySQL’s EXPLAIN statement

EXPLAIN gives you valuable insight into a query’s execution plan and helps you find potential bottlenecks. Here are the steps to take when working with it:

- Add EXPLAIN before the SELECT statement of the slow query. This will output details about its execution plan.

- Analyze the output i.e. the order of table access, index usage, and estimated row count.

- Based on the analysis, optimize the query by adding indexes, rewriting it, or adjusting configuration settings.

- Rerun it. Benchmarking or profiling will help you make sure the changes have led to an improvement.
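
The steps above can be sketched with SQLite’s EXPLAIN QUERY PLAN, used here only because it ships with Python. MySQL’s EXPLAIN prints different columns, so the exact output will not match, but the workflow is the same: inspect the plan, add an index, and compare. The orders table and the index name are assumptions made for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(10_000)],
)

query = "SELECT id, total FROM orders WHERE customer_id = ?"


def plan(sql, params):
    """Return the plan steps for a query as one readable string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The fourth column of each row holds the human-readable detail text.
    return " | ".join(row[3] for row in rows)


before = plan(query, (42,))  # steps 1-2: prefix the query and read the plan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")  # step 3
after = plan(query, (42,))   # step 4: rerun and compare

print("before:", before)
print("after: ", after)
```

Before the index exists, the plan reports a scan of the whole table. Afterwards it reports a search that uses idx_orders_customer.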

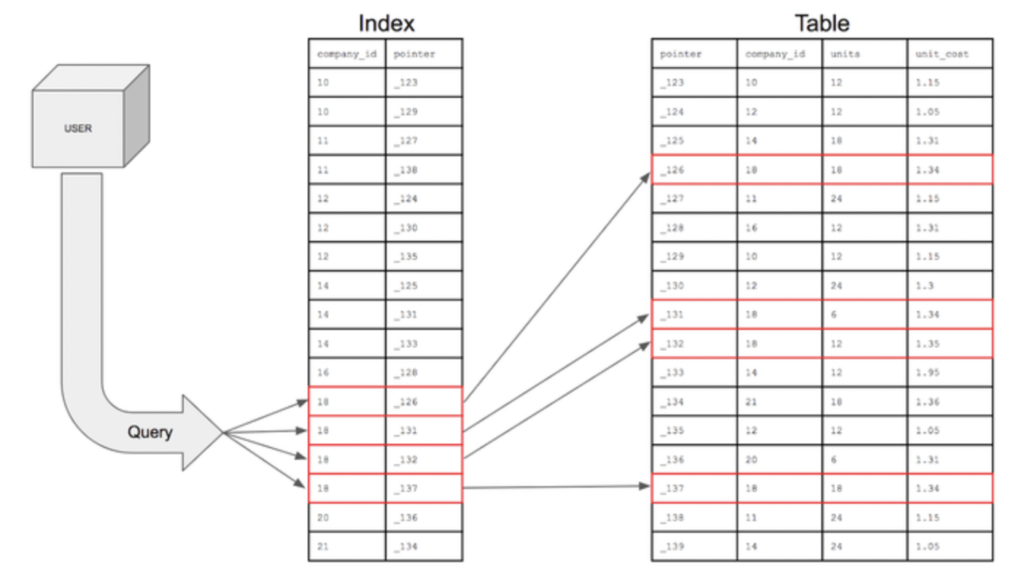

Indexing

An index is like an ordered list of pointers to the actual data in the DB. When a query is executed, the DB uses indexes to quickly locate the requested info, rather than having to search through the entire DB.

By creating indexes on frequently accessed columns, you significantly speed up the retrieval process. However, avoid over-indexing: every index must be updated on each write, which slows down inserts, updates, and deletes.
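
The “ordered list of pointers” idea can be shown with a toy model in Python. This is a deliberately simplified sketch: real databases typically use B-tree structures rather than a sorted list, and all names here are hypothetical. It contrasts a full scan with an index lookup and shows why each extra index adds work to every insert.

```python
import bisect

# Unordered "table": each row is (row_id, email).
rows = [(1, "dave@example.com"), (2, "alice@example.com"),
        (3, "carol@example.com"), (4, "bob@example.com")]

# The "index": (key, position-in-table) pairs kept in sorted key order.
email_index = sorted((email, pos) for pos, (_, email) in enumerate(rows))


def full_scan(email):
    """Check rows one by one until a match is found: O(n)."""
    for row in rows:
        if row[1] == email:
            return row
    return None


def index_lookup(email):
    """Binary-search the sorted index, then follow the pointer: O(log n)."""
    i = bisect.bisect_left(email_index, (email,))
    if i < len(email_index) and email_index[i][0] == email:
        return rows[email_index[i][1]]
    return None


def insert_row(row_id, email):
    """Each insert must also update the index: the cost of over-indexing."""
    rows.append((row_id, email))
    bisect.insort(email_index, (email, len(rows) - 1))
```

With four rows the difference is invisible, but the scan grows linearly with table size while the index lookup grows logarithmically.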

Caching

It involves storing frequently accessed information in memory, rather than retrieving it from the DB every time it is needed.

It is particularly useful for web products that have a lot of traffic or access the same info repeatedly. Plus, the app remains responsive even during peak traffic times.

Server configuration

Proper configuration ensures that the server uses its resources efficiently and handles heavy traffic loads without slowing down or crashing. Do not forget to:

- Allocate enough CPU, memory, and disk space for the server to handle the expected load.

- Configure DB settings such as buffer and cache sizes, and connection limits to match the expected workload.

- Consider optimizing hardware such as selecting solid-state drives (SSDs) for faster read and write speeds or network interface cards (NICs) with higher bandwidth.

- Enable compression. It reduces the size of data transmitted between the server and clients, reducing network bandwidth requirements.

Compression

Compress data before storing it to reduce the amount of disk space required. However, it’s important to balance the benefits of compression against the additional processing time required to compress and decompress data.

Choose among these common methods:

- Text compression algorithms (GZIP or DEFLATE) for text data such as HTML, XML or JSON.

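- Fast general-purpose algorithms (LZO or Snappy) for binary data where speed matters more than compression ratio. Note that images and audio stored in formats such as JPEG or MP3 are already compressed and gain little from a second pass.

- Column-level compression, i.e. data is compressed at the column level rather than at the table level. This provides more fine-grained control over compression.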

- Filegroup compression where data is compressed at the filegroup level. Choose this method if the information is infrequently accessed.

- External tools (7-Zip or WinRAR) provide a high degree of compression but may require additional processing time.
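
As a rough sketch of compressing text data before storage, the example below uses Python’s zlib module (DEFLATE) and stores the result in a BLOB column of an in-memory SQLite database. The documents table and the sample payload are made up for illustration, and real compression ratios depend heavily on the data.

```python
import json
import sqlite3
import zlib

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE documents (id INTEGER PRIMARY KEY, body BLOB)")

# Repetitive JSON compresses well; real-world ratios vary with the data.
payload = json.dumps(
    [{"sku": i, "status": "in_stock", "warehouse": "central"} for i in range(500)]
).encode("utf-8")

# Level 6 is a middle setting: higher levels cost more CPU for smaller output.
compressed = zlib.compress(payload, 6)
conn.execute("INSERT INTO documents (id, body) VALUES (1, ?)", (compressed,))

# Reading the value back requires a decompression step, which costs CPU time.
stored = conn.execute("SELECT body FROM documents WHERE id = 1").fetchone()[0]
restored = zlib.decompress(stored)
ratio = len(compressed) / len(payload)

print(f"original: {len(payload)} bytes, compressed: {len(compressed)} bytes")
```

The decompression on every read is the processing cost mentioned above, so this approach suits data that is large and read relatively rarely.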

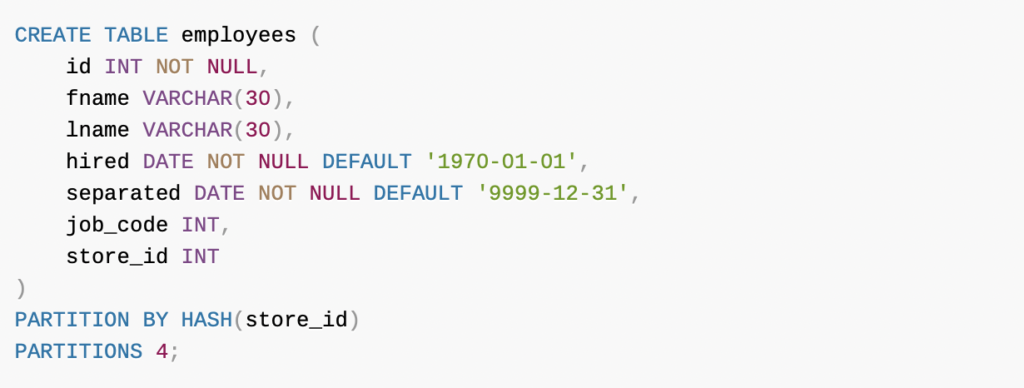

Partitioning

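Partitioning means splitting large tables into smaller, more manageable pieces. It can speed up query execution, because queries that filter on the partition key only need to read the relevant partitions, and it makes maintenance tasks such as archiving old data easier.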

Main steps:

- Choose a partition key, i.e. a column or set of columns used to divide the table into partitions. Choose it carefully so that it distributes the data evenly across the partitions.

- Determine the method: range, hash, or list partitioning. The choice depends on data characteristics and desired goals.

- Create the partitions. Depending on the DBMS, this may involve creating multiple physical tables, each corresponding to a partition.

- Configure partition maintenance. It involves managing partitions, such as adding or removing them, based on changes in the data. This can be automated using scripts or database management tools.

It’s important to regularly monitor partition performance to identify any issues. Monitor disk usage, query performance, and partition maintenance tasks.
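
The three partitioning methods can be sketched as simple routing functions in Python. This is only a conceptual model with hypothetical table names and an assumed partition count. In a real DBMS the routing is declared in the table definition and handled by the engine.

```python
import zlib
from datetime import date

NUM_HASH_PARTITIONS = 4  # assumed partition count


def range_partition(order_date):
    """Range partitioning: one partition per calendar year."""
    return f"orders_{order_date.year}"


def hash_partition(customer_id):
    """Hash partitioning: a stable hash spreads keys over fixed partitions."""
    # crc32 is used because Python's built-in hash() is salted per process
    # for strings, which would make the routing unstable between runs.
    bucket = zlib.crc32(str(customer_id).encode("utf-8")) % NUM_HASH_PARTITIONS
    return f"customers_p{bucket}"


def list_partition(country):
    """List partitioning: explicit values map to named partitions."""
    regions = {"DE": "eu", "FR": "eu", "US": "na", "CA": "na"}
    return f"customers_{regions.get(country, 'other')}"


# Count how many of 10,000 sequential keys land in each hash partition.
counts = {}
for cid in range(10_000):
    name = hash_partition(cid)
    counts[name] = counts.get(name, 0) + 1

print(counts)
```

Printing the counts is a quick way to check the “evenly distributes the data” requirement for a candidate partition key before committing to it.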

Load balancing

Distribute web traffic across multiple servers to improve performance and reliability. Load balancing ensures no single server is overloaded with traffic, resulting in faster response times.
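
One of the simplest distribution strategies is round-robin, sketched below in Python. The server addresses and the RoundRobinBalancer class are hypothetical, and production setups normally rely on a dedicated load balancer rather than application code. The sketch only shows how requests are rotated so that no single server receives all the traffic.

```python
import itertools

# Hypothetical backend addresses; replace with real hosts.
SERVERS = ["app-1.internal:8080", "app-2.internal:8080", "app-3.internal:8080"]


class RoundRobinBalancer:
    """Hand out servers in rotation so each gets an equal share of requests."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def next_server(self):
        return next(self._cycle)


balancer = RoundRobinBalancer(SERVERS)
# Six consecutive requests go to each of the three servers twice, in order.
assignments = [balancer.next_server() for _ in range(6)]
```

Round-robin ignores how busy each server is. Strategies such as least-connections take current load into account at the cost of more bookkeeping.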

Batch Operations

Batch operations enable multiple DB operations to be executed together. This reduces the number of round-trips between the app and the DB. You can implement batch operations using a variety of techniques, including:

- Stored procedures, i.e. pre-compiled DB functions that the application can call to execute multiple operations in a single round-trip.

- Object-relational mapping (ORM) frameworks such as Hibernate or Entity Framework.

- Raw SQL queries (but ensure they are properly optimized and parameterized to prevent SQL injection attacks).

To implement batch operations, follow these steps:

- Identify operations that can be grouped together into a batch.

- Use the appropriate technique to group them together.

- Monitor the performance to identify any bottlenecks.
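
A minimal sketch of batching with raw SQL is shown below, using Python’s sqlite3 module and a hypothetical events table. An in-memory SQLite database has no network round-trips, so this only demonstrates the pattern: group many inserts into one executemany call inside a single transaction instead of issuing and committing them one by one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, action TEXT)"
)

# Step 1: identify operations that can be grouped (here, 1,000 similar inserts).
events = [(i % 50, "page_view") for i in range(1_000)]

# Step 2: group them into one parameterized batch inside one transaction.
with conn:  # commits on success, rolls back if an error is raised
    conn.executemany(
        "INSERT INTO events (user_id, action) VALUES (?, ?)", events
    )

inserted = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
```

With a networked DB, the saving comes mostly from fewer round-trips and fewer commits, so it is worth measuring batch sizes against your own workload.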

Database optimization tools

The following tools help businesses fine-tune their DBs for optimal performance. However, carefully evaluate the pros and cons of each option. For example, GUI-based tools may be more resource-intensive than command-line ones, while command-line tools may have a steeper learning curve. Additionally, some of them require licensing fees or do not support certain DBs. Select the one that best fits your specific needs!

PgBadger

A PostgreSQL log analyzer. It provides insights into query performance, server activity, and more. It also generates detailed reports.

Results: you will be able to analyze PostgreSQL log files and identify performance bottlenecks.

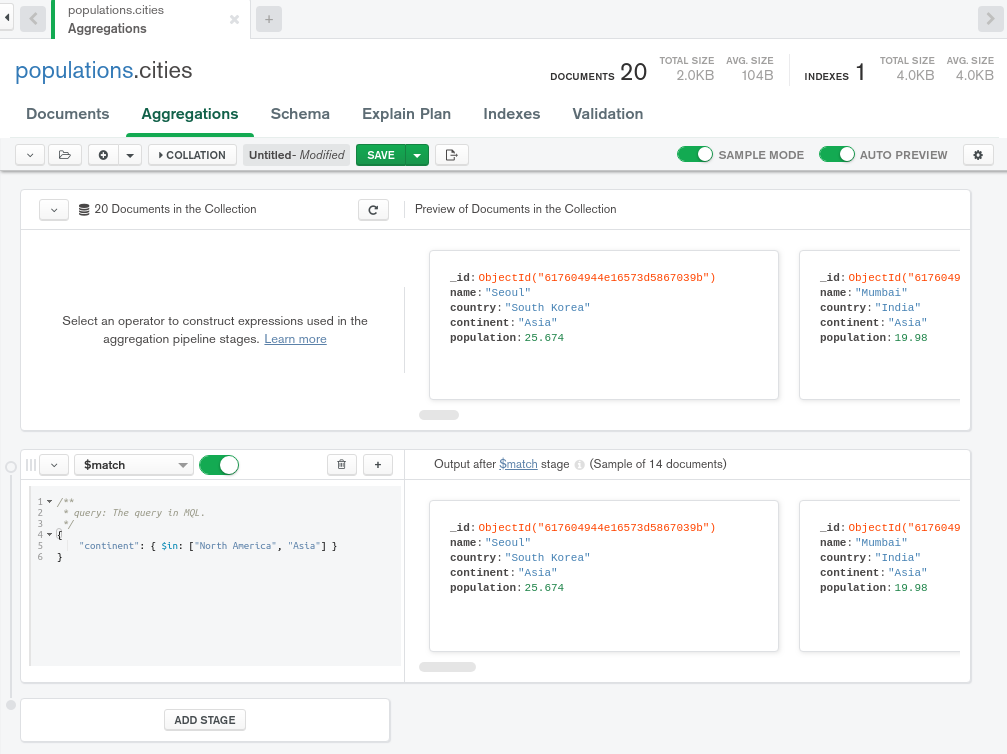

MongoDB Compass

A GUI tool for managing MongoDB databases. It offers features such as data visualization, document validation, and more.

Results: you will be able to analyze query performance and utilize tools for schema optimization and index management.

Percona Toolkit

A suite of command-line tools for MySQL and MariaDB. It helps with tasks such as query profiling, online schema changes and more!

Results: you will be able to effectively manage and optimize MySQL and MariaDB databases through query analysis, replication management, and performance tuning.

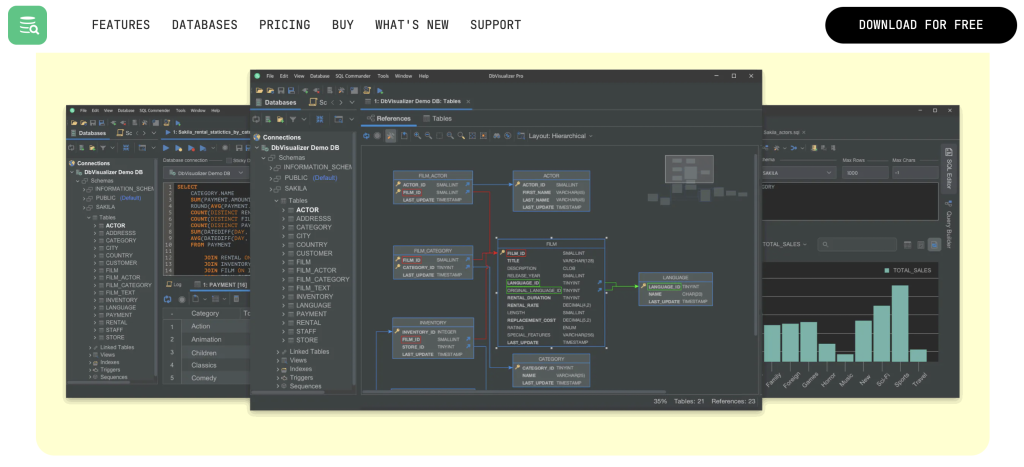

DBVisualizer

A multi-platform DB management tool. It supports several popular DBs, including MySQL, PostgreSQL, Oracle and Microsoft SQL Server. It provides features such as data analysis, SQL editing, and schema browsing.

Results: you’ll gain comprehensive database management capabilities, improved query performance, and access to powerful visualization tools.

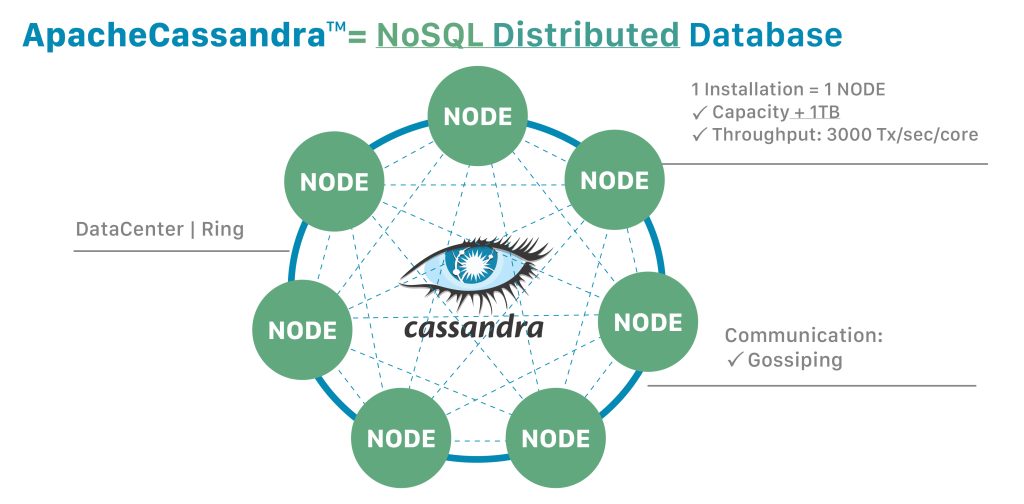

Apache Cassandra

A distributed NoSQL DB that is optimized for high scalability. It offers horizontal scaling, replication, and fault tolerance features.

Results: you’ll get the ability to manage large datasets across distributed systems, ensuring your database is highly available, scalable, and fault-tolerant!

Book a free consultation with our experts to figure out which tool best fits your case!

Conclusion

Effective DB optimization requires a deep understanding of various aspects, from data structures to information transfer. Implementing the methods described above will help your application run swiftly and efficiently, giving it a competitive edge in the market. However, if you’re unsure where to start, how to correctly execute certain processes, or how to measure the results, EDE is ready to assist you with this crucial task. Contact our managers to schedule a free consultation!

FAQ

What is database optimization and why is it important for web applications?

It is the process of improving a database’s performance and efficiency. It involves various techniques such as:

- creating indexes

- implementing caching mechanisms

- connection pooling and more.

For web apps, database performance optimization is crucial because it directly affects the responsiveness of the app. If a company does not improve database performance, the consequences can be serious: a poor user experience, loss of customers, and ultimately lower revenue. A consistent approach to database performance management is therefore essential.

What are the common performance issues that can arise in database-driven web applications?

Common issues that arise in such database-driven apps include:

- slow queries

- lack of indexing

- insufficient hardware resources

- network latency

- poor design

- inefficient caching mechanisms.

The good news is that there are various database tuning techniques that help address these issues. Indexing, proper server configuration, and the other methods described above all contribute to a high-performance database.